#Serverless Streaming Solutions


Azure Data Engineering Tools For Data Engineers

Azure is Microsoft's cloud computing platform, and it offers a broad set of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that can be tailored to an organization's specific requirements.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully managed, serverless data integration service designed to handle data at scale. It enables data engineers to ingest, transform, and move large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.
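To make "processing fast-streaming data" concrete, here is a minimal sketch of a tumbling-window count, one of the basic operations a stream-processing engine performs. It is plain Python for illustration only, not Azure Stream Analytics' own query language; the function name and the `(timestamp, payload)` event shape are assumptions.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per fixed, non-overlapping time window.

    `events` is an iterable of (timestamp_in_seconds, payload) pairs
    (a hypothetical shape chosen for this sketch).
    """
    counts = defaultdict(int)
    for timestamp, _payload in events:
        # Every event belongs to exactly one window, keyed by its start time.
        window_start = int(timestamp // window_seconds) * window_seconds
        counts[window_start] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    sample = [(0, "a"), (4, "b"), (5, "c"), (11, "d")]
    print(tumbling_window_counts(sample, window_seconds=5))
```

A real engine does the same grouping continuously over unbounded input and also has to deal with late or out-of-order events, which this sketch ignores.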

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure Database for MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure Database for PostgreSQL

Azure Database for PostgreSQL is a fully managed service for the open-source PostgreSQL database, designed so that teams can focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security, AI-assisted performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering

Classes: 200 hours of live classes

Lectures: 199 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 70% scholarship on this course

Interactive activities: Labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps

Classes: 180+ hours of live classes

Lectures: 300 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 67% scholarship on this course

Interactive activities: Labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training


Your Guide to Building a Future-Proof Career with Cloud Computing Training in Pune

In today’s digital world, cloud computing is no longer just a buzzword; it’s a game changer. From storing files to running complex applications, businesses of all sizes are moving to the cloud. And with this shift, there's a huge demand for professionals who understand cloud technologies inside and out. That’s where high-quality cloud computing training in Pune can make all the difference.

Why Cloud Computing Skills Matter

Think about how much we rely on digital tools today: email, data storage, video streaming, even the apps on your phone. Almost all of these are powered by cloud technology behind the scenes. Companies want systems that are fast, secure, scalable, and cost-effective, and cloud computing delivers all of that.

Because of this, cloud skills have become incredibly valuable in the job market. If you're just starting your career or looking to switch paths, learning cloud computing opens the door to exciting and high-paying opportunities.

What You’ll Learn in Cloud Computing Training

The good news is that you don’t need to have years of tech experience to get started. Whether you're a recent graduate or a working professional, the right cloud computing training in Pune can help you build a solid foundation. Here’s what most training programs cover:

Basics of Cloud Platforms like AWS, Microsoft Azure, and Google Cloud

Core Concepts like virtualization, containers, deployment models, and serverless architecture

Real-World Projects to help you apply what you learn in a practical way

Certification Prep to get you ready for top industry certifications

And the best part? These programs are often designed to fit your schedule. Many offer weekend or evening batches, so you can learn without quitting your job.

Why Pune is a Great Place to Learn Cloud Computing

Pune is known not just for its educational institutions, but also for being one of India’s fastest-growing IT hubs. With so many tech companies operating here, it’s the perfect environment for learning and networking.

The demand for skilled cloud professionals is growing every day, and local companies often hire directly from training programs. That means you’re not just learning; you’re building real career opportunities while you're at it.

Who Should Consider This Training?

Honestly, almost anyone looking to grow in tech can benefit. This includes:

IT professionals who want to stay updated and in demand

Developers looking to expand into cloud or DevOps roles

Freshers trying to land their first job in tech

Non-tech professionals looking for a career shift

No matter where you’re starting from, cloud computing training in Pune can help you level up.

Real Career Growth Starts Here

After completing your training, you'll be qualified for roles like:

Cloud Engineer

Solutions Architect

DevOps Engineer

Cloud Consultant

These aren’t just fancy job titles; they come with great salaries, job security, and a lot of room to grow.

Conclusion

If you're looking to future-proof your career and join one of the most in-demand fields in tech, cloud computing training in Pune is the way to go. With the right guidance and hands-on experience, you’ll be ready to take on real-world challenges and stand out in the job market.

One institute that stands out in this space is Ethans Tech. Known for its experienced trainers, industry-relevant curriculum, and strong placement support, Ethans Tech has helped thousands of learners take their first step into the cloud. If you're serious about building a career in cloud computing, Ethans Tech is the go-to place to start.

Your journey to a rewarding tech career begins now. Don’t wait to take that first step.

#cloud computing classes in pune#cloud computing course in pune#cloud computing certification in pune#cloud computing training in pune


BigLake Storage: An Open Data Lakehouse on Google Cloud

BigLake Storage

Build open, high-performance, enterprise-grade, Iceberg-native lakehouses with BigLake storage

With recent improvements to the BigLake storage engine, businesses can use Apache Iceberg to build open, high-performance, enterprise-grade data lakehouses on Google Cloud. Customers no longer have to choose between fully managed, enterprise-grade storage management and open formats like Apache Iceberg.

As data management is transformed, businesses want adaptable, open, and interoperable architectures that let several engines work on a single copy of data. Apache Iceberg is a popular open table format. The latest BigLake storage developments bring Google's infrastructure to Apache Iceberg, enabling open data lakehouses.

Major advances include:

BigLake metastore is generally available: BigLake metastore, formerly BigQuery metastore, is now generally available (GA). This fully managed, serverless, and scalable service simplifies runtime metadata management and operations for BigQuery and other Iceberg-compatible engines. Because it runs on Google's global metadata management infrastructure, it reduces the need to operate your own metastore implementation. BigLake metastore is the foundation for open interoperability.

Iceberg REST Catalog API in Preview: Complementing the generally available Custom Iceberg Catalog, the Iceberg REST Catalog (Preview) provides a standard REST interface for interoperability. Users, including Spark users, can use the BigLake metastore as a serverless Iceberg catalog. The Custom Iceberg Catalog lets Spark and other open-source engines work with Apache Iceberg tables and with BigLake tables in BigQuery.

Google Cloud is simplifying lakehouse maintenance by pairing Apache Iceberg with Cloud Storage management. Cloud Storage features such as Autoclass tiering and encryption are supported, along with automatic table maintenance, including compaction and garbage collection. This improves Iceberg data management in Cloud Storage.

BigLake tables for Apache Iceberg in BigQuery are generally available: These tables combine BigQuery's scalable, real-time metadata with the openness of the Iceberg format. This enables high-throughput streaming ingestion through BigQuery's Write API and zero-latency reads at tens of GiB/second. The tables also offer automatic table management (compaction, garbage collection), native integration with Vertex AI, performance improvements from automatic reclustering, and fine-grained DML and multi-table transactions (coming soon in preview). They keep Iceberg's openness while providing managed, enterprise-ready functionality. BigLake automatically creates an Apache Iceberg V2 metadata snapshot and registers it in its metastore. This snapshot is updated automatically after each table modification.

BigLake natively integrates with Dataplex Universal Catalog for AI-powered governance. This integration provides consistent, fine-grained access controls so that Dataplex governance policies apply across engines. Direct Cloud Storage access supports table-level access control, whereas access through BigQuery, using Storage API connectors for open-source engines, allows finer-grained control. Dataplex integration improves governance of BigQuery and BigLake Iceberg tables with search, discovery, profiling, data quality checks, and end-to-end data lineage. Dataplex simplifies data discovery with AI-generated insights and semantic search. These end-to-end governance benefits apply automatically and don't require separate registration.

The BigLake metastore enables interoperability with BigQuery, AlloyDB (preview), Spark, and Flink. This increased compatibility allows AlloyDB users to easily consume analytical BigLake tables for Apache Iceberg from within AlloyDB (Preview). PostgreSQL users can link real-time AlloyDB transactional data with rich analytical data for operational and AI-driven use cases.

CME Group Executive Director Zenul Pomal noted, “We needed teams throughout the company to access data in a consistent and secure way – regardless of where it is stored or what technologies they were using.” For CME Group, Google's BigLake was the clear choice. It provides a uniform layer for accessing data and a fully managed experience with enterprise capabilities via BigQuery, without moving or duplicating data, whether the data is in traditional tables or open table formats like Apache Iceberg. Metadata quality is critical as the company explores gen AI applications, and BigLake metastore and Data Catalog help it maintain high-quality metadata.

At Google Cloud Next '25, Google Cloud announced support for change data capture, multi-statement transactions, and fine-grained DML in the coming months.

Google Cloud is evolving BigLake into a comprehensive storage engine that works with open-source, third-party, and Google Cloud services, eliminating the trade-offs between open and managed data solutions. This accelerates data and AI innovation.

#BigLakestorage#widelyaccessible#BigLakeMetastore#BigQuery#ApacheIceberg#AlloyDB#technology#technologynews#technews#news#govindhtech


Best Backend Frameworks for Web Development 2025: The Future of Scalable and Secure Web Applications

The backbone of any web application is its backend—handling data processing, authentication, server-side logic, and integrations. As the demand for high-performance applications grows, choosing the right backend framework becomes critical for developers and businesses alike. With continuous technological advancements, the best backend frameworks for web development 2025 focus on scalability, security, and efficiency.

To build powerful and efficient backend systems, developers also rely on various backend development tools and technologies that streamline development workflows, improve database management, and enhance API integrations.

This article explores the top backend frameworks in 2025, their advantages, and the essential tools that power modern backend development.

1. Why Choosing the Right Backend Framework Matters

A backend framework is a foundation that supports server-side functionalities, including:

Database Management – Handling data storage and retrieval efficiently.

Security Features – Implementing authentication, authorization, and encryption.

Scalability – Ensuring the system can handle growing user demands.

API Integrations – Connecting frontend applications and external services.

With various options available, selecting the right framework can determine how efficiently an application performs. Let’s explore the best backend frameworks for web development 2025 that dominate the industry.

2. Best Backend Frameworks for Web Development 2025

a) Node.js (Express.js & NestJS) – The JavaScript Powerhouse

Node.js, a JavaScript runtime rather than a framework in the strict sense, remains one of the most preferred backend platforms due to its non-blocking, event-driven architecture. It enables fast and scalable web applications, making it ideal for real-time apps.

Why Choose Node.js in 2025?

Asynchronous Processing: Handles multiple requests simultaneously, improving performance.

Rich Ecosystem: Thousands of NPM packages for rapid development.

Microservices Support: Works well with microservices and serverless architectures.

Best Use Cases

Real-time applications (Chat apps, Streaming platforms).

RESTful and GraphQL APIs.

Single Page Applications (SPAs).

Two popular Node.js frameworks:

Express.js – Minimalist and lightweight, perfect for API development.

NestJS – A modular and scalable framework written in TypeScript for enterprise applications.

b) Django – The Secure Python Framework

Django, a high-level Python framework, remains a top choice for developers focusing on security and rapid development. It follows the "batteries-included" philosophy, providing built-in features for authentication, security, and database management.

Why Choose Django in 2025?

Strong Security Features: Built-in protection against SQL injection and XSS attacks.

Fast Development: Auto-generated admin panels and ORM make development quicker.

Scalability: Optimized for handling high-traffic applications.

Best Use Cases

E-commerce websites.

Data-driven applications.

Machine learning and AI-powered platforms.

c) Spring Boot – The Java Enterprise Solution

Spring Boot continues to be a dominant framework for enterprise-level applications, offering a robust, feature-rich environment with seamless database connectivity and cloud integrations.

Why Choose Spring Boot in 2025?

Microservices Support: Ideal for distributed systems and large-scale applications.

High Performance: Optimized for cloud-native development.

Security & Reliability: Built-in authentication, authorization, and encryption mechanisms.

Best Use Cases

Enterprise applications and banking software.

Large-scale microservices architecture.

Cloud-based applications with Kubernetes and Docker.

d) Laravel – The PHP Framework That Keeps Evolving

Laravel continues to be the most widely used PHP framework in 2025. Its expressive syntax, security features, and ecosystem make it ideal for web applications of all sizes.

Why Choose Laravel in 2025?

Eloquent ORM: Simplifies database interactions.

Blade Templating Engine: Enhances frontend-backend integration.

Robust Security: Protects against common web threats.

Best Use Cases

CMS platforms and e-commerce websites.

SaaS applications.

Backend for mobile applications.

e) FastAPI – The Rising Star for High-Performance APIs

FastAPI is a modern, high-performance Python framework designed for building APIs. It has gained massive popularity due to its speed and ease of use.

Why Choose FastAPI in 2025?

Asynchronous Support: Delivers faster API response times.

Data Validation: Built-in request validation driven by Python type hints.

Automatic Documentation: Generates interactive API docs (Swagger UI) from an OpenAPI schema.
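The asynchronous model behind the first point can be sketched with Python's standard `asyncio` module alone. This is not FastAPI code; `fetch_record` and `handle_batch` are hypothetical names, and `asyncio.sleep` stands in for a non-blocking database or HTTP call.

```python
import asyncio
import time


async def fetch_record(record_id: int, delay: float = 0.1) -> dict:
    # asyncio.sleep stands in for a non-blocking I/O call (database, HTTP, ...).
    await asyncio.sleep(delay)
    return {"id": record_id}


async def handle_batch(record_ids: list[int]) -> list[dict]:
    # gather() starts all coroutines and waits for them concurrently,
    # returning results in the same order as the inputs.
    return await asyncio.gather(*(fetch_record(i) for i in record_ids))


if __name__ == "__main__":
    started = time.perf_counter()
    results = asyncio.run(handle_batch([1, 2, 3]))
    elapsed = time.perf_counter() - started
    print(results, f"{elapsed:.2f}s")
```

Because the three waits overlap, the batch should take roughly as long as one call rather than three. An async framework applies the same idea to many in-flight requests at once.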

Best Use Cases

Machine learning and AI-driven applications.

Data-intensive backend services.

Microservices and serverless APIs.

3. Essential Backend Development Tools and Technologies

To build scalable and efficient backend systems, developers rely on various backend development tools and technologies. Here are some must-have tools:

a) Database Management Tools

PostgreSQL – A powerful relational database system for complex queries.

MongoDB – A NoSQL database ideal for handling large volumes of unstructured data.

Redis – A high-speed in-memory database for caching.

b) API Development and Testing Tools

Postman – Simplifies API development and testing.

Swagger/OpenAPI – Generates interactive API documentation.

c) Containerization and DevOps Tools

Docker – Enables containerized applications for easy deployment.

Kubernetes – Automates deployment and scaling of backend services.

Jenkins – A CI/CD tool for continuous integration and automation.

d) Authentication and Security Tools

OAuth 2.0 / JWT – Token-based authorization and authentication for APIs.

Keycloak – Identity and access management.

OWASP ZAP – Security testing tool for identifying vulnerabilities.

e) Performance Monitoring and Logging Tools

Prometheus & Grafana – Real-time monitoring and alerting.

Logstash & Kibana – Centralized logging and analytics.

These tools and technologies help developers streamline backend processes, enhance security, and optimize performance.

4. Future Trends in Backend Development

Backend development continues to evolve. Here are some key trends for 2025:

Serverless Computing – Services such as AWS Lambda, Google Cloud Functions, and Azure Functions enable developers to build scalable, cost-efficient backends without managing infrastructure.

AI-Powered Backend Optimization – AI-driven database queries and performance monitoring are enhancing efficiency.

GraphQL Adoption – More applications are shifting from REST APIs to GraphQL for flexible data fetching.

Edge Computing – Backend processing is moving closer to the user, reducing latency and improving speed.

Thus, selecting the right backend framework is crucial for building modern, scalable, and secure web applications. The best backend frameworks for web development 2025—including Node.js, Django, Spring Boot, Laravel, and FastAPI—offer unique advantages tailored to different project needs.

Pairing these frameworks with cutting-edge backend development tools and technologies ensures optimized performance, security, and seamless API interactions. As web applications continue to evolve, backend development will play a vital role in delivering fast, secure, and efficient digital experiences.


Getting Started with Cloud-Native Data Processing Using DataStreamX

Transforming Data Streams with Cloudtopiaa’s Real-Time Infrastructure

In today’s data-driven world, the ability to process data in real time is critical for businesses aiming to stay competitive. Whether it’s monitoring IoT devices, analyzing sensor data, or powering intelligent applications, cloud-native data processing has become a game-changer. In this guide, we’ll explore how you can leverage DataStreamX, Cloudtopiaa’s robust data processing engine, for building scalable, real-time systems.

What is Cloud-Native Data Processing?

Cloud-native data processing is an approach where data is collected, processed, and analyzed directly on cloud infrastructure, leveraging the scalability, security, and flexibility of cloud services. This means you can easily manage data pipelines without worrying about physical servers or complex on-premises setups.

Key Benefits of Cloud-Native Data Processing:

Scalability: Easily process data from a few devices to thousands.

Low Latency: Achieve real-time insights without delays.

Cost-Efficiency: Pay only for the resources you use, thanks to serverless cloud technology.

Reliability: Built-in fault tolerance and data redundancy ensure uptime.

Introducing DataStreamX: Real-Time Infrastructure on Cloudtopiaa

DataStreamX is a powerful, low-code, cloud-native data processing engine designed to handle real-time data streams on Cloudtopiaa. It allows businesses to ingest, process, and visualize data in seconds, making it perfect for a wide range of applications:

IoT (Internet of Things) data monitoring

Real-time analytics for smart cities

Edge computing for industrial systems

Event-based automation for smart homes

Core Features of DataStreamX:

Real-Time Processing: Handle continuous data streams without delay.

Serverless Cloud Architecture: No need for complex server management.

Flexible Data Adapters: Connect easily with MQTT, HTTP, APIs, and more.

Scalable Pipelines: Process data from a few devices to thousands seamlessly.

Secure Infrastructure: End-to-end encryption and role-based access control.

Setting Up Your Cloud-Native Data Processing Pipeline

Follow these simple steps to create a data processing pipeline using DataStreamX on Cloudtopiaa:

Step 1: Log into Cloudtopiaa

Visit Cloudtopiaa Platform.

Access the DataStreamX dashboard.

Step 2: Create Your First Data Stream

Choose the type of data stream (e.g., MQTT for IoT data).

Set up your input source (sensors, APIs, cloud storage).

Step 3: Configure Real-Time Processing Rules

Define your processing logic (e.g., filter temperature data above 50°C).

Set triggers for real-time alerts.

Step 4: Visualize Your Data

Use Cloudtopiaa’s dashboard to see real-time data visualizations.

Customize your view with graphs, metrics, and alerts.

Real-World Use Case: Smart Home Temperature Monitoring

Imagine you have a smart home setup with temperature sensors in different rooms. You want to monitor these in real-time and receive alerts if temperatures exceed a safe limit.

Here’s how DataStreamX can help:

Sensors send temperature data to Cloudtopiaa.

DataStreamX processes the data in real-time.

If any sensor records a temperature above the set threshold, an alert is triggered.

The dashboard displays real-time temperature graphs, allowing you to monitor conditions instantly.
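The threshold check in this flow can be sketched in a few lines of plain Python. This is not DataStreamX's API or rule syntax, which the article does not show; `Reading`, `find_alerts`, and the 50°C default (borrowed from the rule example in Step 3) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    room: str
    celsius: float


def find_alerts(readings, threshold_celsius=50.0):
    """Return the readings strictly above the threshold, in arrival order."""
    return [r for r in readings if r.celsius > threshold_celsius]


if __name__ == "__main__":
    incoming = [
        Reading("living room", 22.0),
        Reading("kitchen", 52.5),
        Reading("garage", 50.0),  # exactly at the limit, so no alert
    ]
    for alert in find_alerts(incoming):
        print(f"ALERT: {alert.room} is at {alert.celsius}°C")
```

In a managed pipeline the same predicate would be configured as a processing rule and evaluated on each incoming message rather than on a list.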

Best Practices for Cloud-Native Data Processing

Optimize Data Streams: Only collect and process necessary data.

Use Serverless Architecture: Avoid the hassle of managing servers.

Secure Your Streams: Use role-based access control and encrypted communication.

Visualize for Insight: Build real-time dashboards to monitor data trends.

Why Choose Cloudtopiaa for Real-Time Data Processing?

Cloudtopiaa’s DataStreamX offers a complete solution for cloud-native data processing with:

High Availability: Reliable infrastructure with minimal downtime.

Ease of Use: Low-code interface for quick setup.

Scalability: Seamlessly handle thousands of data streams.

Cost-Effective: Only pay for what you use.

Start Your Cloud-Native Data Journey Today

Ready to transform your data processing with cloud-native technology? With DataStreamX on Cloudtopiaa, you can create powerful, scalable, and secure data pipelines with just a few clicks.

👉 Get started with Cloudtopiaa and DataStreamX now: Cloudtopiaa Platform

#cloudtopiaa#CloudNative#DataProcessing#RealTimeData#Cloudtopiaa#DataStreamX#ServerlessCloud#SmartInfrastructure#EdgeComputing#DataAnalytics#TechInnovation


Cloud TV Market Size, Share, Analysis, Forecast, Growth 2032: Segment-wise Breakdown and Performance

The Cloud TV market was valued at USD 1.98 billion in 2023 and is expected to reach USD 12.24 billion by 2032, growing at a CAGR of 22.44% from 2024 to 2032.
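The stated growth rate can be sanity-checked from the two endpoint values. The sketch below assumes nine annual compounding periods (the 2023 base value growing through 2032); the `cagr` helper is a hypothetical name, not something taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # USD billions, taken from the figures quoted above.
    rate = cagr(start_value=1.98, end_value=12.24, years=9)
    print(f"Implied CAGR: {rate:.2%}")
```

Under that assumption the implied rate comes out at about 22.4%, in line with the quoted figure once rounding of the endpoint values is taken into account.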

The Cloud TV market is rapidly transforming the way consumers access and engage with digital content. With the convergence of over-the-top (OTT) media, cloud computing, and next-gen user experiences, Cloud TV has emerged as a dominant force reshaping traditional broadcasting models. Telecom operators, broadcasters, and media companies are increasingly turning to cloud-based solutions to streamline operations, lower infrastructure costs, and meet the surging demand for on-demand, multiscreen content delivery.

Cloud TV Market Poised for Dynamic Growth Amid Shifting Media Landscape

As user expectations shift toward seamless, cross-platform experiences, Cloud TV continues to gain traction among content providers and consumers alike. The integration of advanced analytics, AI-based recommendations, and scalable delivery systems positions Cloud TV as a cornerstone of future media strategies. This evolution reflects not only technological innovation but also a deeper change in consumer behavior, driving widespread adoption across both developed and emerging markets.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3613

Key Market Players:

Brightcove – Brightcove Video Cloud

Akamai Technologies – Akamai Adaptive Media Delivery

Alibaba Group – Alibaba Cloud Video Streaming

Sony – PlayStation Vue

Zee Entertainment – ZEE5

Netflix – Netflix Streaming Service

Amazon – Amazon Prime Video

Google – YouTube TV

Apple – Apple TV+

Roku – Roku Streaming Platform

Vimeo – Vimeo OTT

Microsoft – Azure Media Services

Hulu – Hulu with Live TV

Disney – Disney+

Samsung Electronics – Samsung Smart TV

LG Electronics – LG WebOS

Comcast – Xfinity Stream

ViacomCBS – Paramount+

WarnerMedia – HBO Max

Sling TV – Sling TV Streaming Service

Market Analysis

The Cloud TV market is witnessing significant momentum, driven by a growing appetite for on-demand and personalized content experiences. Service providers are leveraging cloud infrastructure to deliver content without the constraints of traditional satellite or cable systems. Additionally, cloud-native platforms enable faster time-to-market and operational flexibility, making them ideal for both new entrants and established players. The market's competitive landscape is characterized by strategic partnerships, mergers, and innovations aimed at enhancing user engagement and monetization.

Market Trends

Increasing adoption of hybrid monetization models (AVOD, SVOD, TVOD)

Proliferation of AI-powered content curation and voice-enabled navigation

Rising demand for low-latency streaming and ultra-HD content delivery

Growing use of serverless and microservices-based architecture

Expansion of cloud TV services in rural and underserved regions

Heightened emphasis on cybersecurity and DRM (digital rights management)

Market Scope

The Cloud TV market spans a wide spectrum of applications, including live streaming, catch-up TV, network DVR, and interactive advertising. With capabilities extending beyond traditional video services, Cloud TV integrates seamlessly with IoT devices, smart TVs, mobile platforms, and wearable tech, fostering immersive viewing experiences. Enterprises are also leveraging Cloud TV platforms for internal communications, training, and brand engagement. The scope further extends into e-learning, fitness streaming, and live event broadcasting, reflecting its versatility.

Market Forecast

The market is expected to undergo transformative growth over the coming years, powered by technological innovation, expanding internet penetration, and shifts in content consumption habits. Cloud-native deployments will play a pivotal role in enabling rapid scalability and geographic expansion. As content providers strive for agility and personalized delivery, the demand for modular, cloud-based TV ecosystems will only accelerate. The forecast period will also see increased investment in AI, edge computing, and 5G to enhance service quality and user retention.

Access Complete Report: https://www.snsinsider.com/reports/cloud-tv-market-3613

Conclusion

Cloud TV is not just a technological upgrade; it's a revolution in the way media is produced, distributed, and consumed. For businesses, it presents an unmatched opportunity to redefine audience engagement and unlock new revenue streams. As the digital entertainment era matures, those who embrace Cloud TV now are poised to lead the next wave of innovation. In a world where content is king, Cloud TV is fast becoming the crown.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)


Machine Learning Infrastructure: The Foundation of Scalable AI Solutions

Introduction: Why Machine Learning Infrastructure Matters

In today's digital-first world, the adoption of artificial intelligence (AI) and machine learning (ML) is revolutionizing every industry—from healthcare and finance to e-commerce and entertainment. However, while many organizations aim to leverage ML for automation and insights, few realize that success depends not just on algorithms, but also on a well-structured machine learning infrastructure.

Machine learning infrastructure provides the backbone needed to deploy, monitor, scale, and maintain ML models effectively. Without it, even the most promising ML solutions fail to meet their potential.

In this comprehensive guide from diglip7.com, we’ll explore what machine learning infrastructure is, why it’s crucial, and how businesses can build and manage it effectively.

What is Machine Learning Infrastructure?

Machine learning infrastructure refers to the full stack of tools, platforms, and systems that support the development, training, deployment, and monitoring of ML models. This includes:

Data storage systems

Compute resources (CPU, GPU, TPU)

Model training and validation environments

Monitoring and orchestration tools

Version control for code and models

Together, these components form the ecosystem where machine learning workflows operate efficiently and reliably.

Key Components of Machine Learning Infrastructure

To build robust ML pipelines, several foundational elements must be in place:

1. Data Infrastructure

Data is the fuel of machine learning. Key tools and technologies include:

Data Lakes & Warehouses: Store structured and unstructured data (e.g., AWS S3, Google BigQuery).

ETL Pipelines: Extract, transform, and load raw data for modeling (e.g., Apache Airflow, dbt).

Data Labeling Tools: For supervised learning (e.g., Labelbox, Amazon SageMaker Ground Truth).

2. Compute Resources

Training ML models requires high-performance computing. Options include:

On-Premise Clusters: Can be cost-effective for large enterprises with steady, high utilization.

Cloud Compute: Scalable resources like AWS EC2, Google Cloud AI Platform, or Azure ML.

GPUs/TPUs: Essential for deep learning and neural networks.

3. Model Training Platforms

These platforms simplify experimentation and hyperparameter tuning:

TensorFlow, PyTorch, Scikit-learn: Popular ML libraries.

MLflow: Experiment tracking and model lifecycle management.

KubeFlow: ML workflow orchestration on Kubernetes.

4. Deployment Infrastructure

Once trained, models must be deployed in real-world environments:

Containers & Microservices: Docker, Kubernetes, and serverless functions.

Model Serving Platforms: TensorFlow Serving, TorchServe, or custom REST APIs.

CI/CD Pipelines: Automate testing, integration, and deployment of ML models.

5. Monitoring & Observability

Key to ensuring ongoing model performance:

Drift Detection: Spot when input data or model predictions shift away from what the model saw during training.

Performance Monitoring: Track latency, accuracy, and throughput.

Logging & Alerts: Tools like Prometheus and Grafana, fed by metrics from serving platforms such as Seldon Core.
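As a rough illustration of drift detection, the sketch below computes a Population Stability Index (PSI) between a training-time sample and a recent production sample of one numeric feature. PSI is only one common heuristic, the function name and bin count are assumptions made for this example, and the often-quoted alert threshold of about 0.2 is a rule of thumb rather than a standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if every expected value is identical.
    width = (hi - lo) / bins or 1.0

    def histogram(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            # Values outside the training range are clamped into the edge bins.
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    expected_share = histogram(expected)
    actual_share = histogram(actual)
    return sum(
        (a - e) * math.log(a / e) for e, a in zip(expected_share, actual_share)
    )


training_sample = [i / 100 for i in range(100)]
shifted_sample = [value + 0.5 for value in training_sample]
psi_same = population_stability_index(training_sample, training_sample)
psi_shifted = population_stability_index(training_sample, shifted_sample)
```

In practice a check like this would run on a schedule against recent inference inputs, with the result exported as a metric that the alerting tools above can watch.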

Benefits of Investing in Machine Learning Infrastructure

Here’s why having a strong machine learning infrastructure matters:

Scalability: Run models on large datasets and serve thousands of requests per second.

Reproducibility: Re-run experiments with the same configuration.

Speed: Accelerate development cycles with automation and reusable pipelines.

Collaboration: Enable data scientists, ML engineers, and DevOps to work in sync.

Compliance: Keep data and models auditable and secure for regulations like GDPR or HIPAA.

Real-World Applications of Machine Learning Infrastructure

Let’s look at how industry leaders use ML infrastructure to power their services:

Netflix: Uses a robust ML pipeline to personalize content and optimize streaming.

Amazon: Trains recommendation models using massive data pipelines and custom ML platforms.

Tesla: Collects real-time driving data from vehicles and retrains autonomous driving models.

Spotify: Relies on cloud-based infrastructure for playlist generation and music discovery.

Challenges in Building ML Infrastructure

Despite its importance, developing ML infrastructure has its hurdles:

High Costs: GPU servers and cloud compute aren't cheap.

Complex Tooling: Choosing the right combination of tools can be overwhelming.

Maintenance Overhead: Regular updates, monitoring, and security patching are required.

Talent Shortage: Skilled ML engineers and MLOps professionals are in short supply.

How to Build Machine Learning Infrastructure: A Step-by-Step Guide

Here’s a simplified roadmap for setting up scalable ML infrastructure:

Step 1: Define Use Cases

Know what problem you're solving. Fraud detection? Product recommendations? Forecasting?

Step 2: Collect & Store Data

Use data lakes, warehouses, or relational databases. Ensure it’s clean, labeled, and secure.

Step 3: Choose ML Tools

Select frameworks (e.g., TensorFlow, PyTorch), orchestration tools, and compute environments.

Step 4: Set Up Compute Environment

Use cloud-based Jupyter notebooks, Colab, or on-premise GPUs for training.

Step 5: Build CI/CD Pipelines

Automate model testing and deployment with Git-triggered pipelines, Jenkins, and a model registry such as MLflow.

Step 6: Monitor Performance

Track accuracy, latency, and data drift. Set alerts for anomalies.

Step 7: Iterate & Improve

Collect feedback, retrain models, and scale solutions based on business needs.

Machine Learning Infrastructure Providers & Tools

Below are some popular platforms that help streamline ML infrastructure, with each tool's purpose and an example use:

Amazon SageMaker: Full ML development environment. Example: end-to-end ML pipeline.

Google Vertex AI: Cloud ML service. Example: training, deploying, and managing ML models.

Databricks: Big data + ML. Example: collaborative notebooks.

KubeFlow: Kubernetes-based ML workflows. Example: model orchestration.

MLflow: Model lifecycle tracking. Example: experiments, models, metrics.

Weights & Biases: Experiment tracking. Example: visualization and monitoring.

Expert Review

Reviewed by: Rajeev Kapoor, Senior ML Engineer at DataStack AI

"Machine learning infrastructure is no longer a luxury; it's a necessity for scalable AI deployments. Companies that invest early in robust, cloud-native ML infrastructure are far more likely to deliver consistent, accurate, and responsible AI solutions."

Frequently Asked Questions (FAQs)

Q1: What is the difference between ML infrastructure and traditional IT infrastructure?

Answer: Traditional IT supports business applications, while ML infrastructure is designed for data processing, model training, and deployment at scale. It often includes specialized hardware (e.g., GPUs) and tools for data science workflows.

Q2: Can small businesses benefit from ML infrastructure?

Answer: Yes, with the rise of cloud platforms like AWS SageMaker and Google Vertex AI, even startups can leverage scalable machine learning infrastructure without heavy upfront investment.

Q3: Is Kubernetes necessary for ML infrastructure?

Answer: While not mandatory, Kubernetes helps orchestrate containerized workloads and is widely adopted for scalable ML infrastructure, especially in production environments.

Q4: What skills are needed to manage ML infrastructure?

Answer: Familiarity with Python, cloud computing, Docker/Kubernetes, CI/CD, and ML frameworks like TensorFlow or PyTorch is essential.

Q5: How often should ML models be retrained?

Answer: It depends on data volatility. In dynamic environments (e.g., fraud detection), retraining may occur weekly or daily. In stable domains, monthly or quarterly retraining suffices.

Final Thoughts

Machine learning infrastructure isn’t just about stacking technologies—it's about creating an agile, scalable, and collaborative environment that empowers data scientists and engineers to build models with real-world impact. Whether you're a startup or an enterprise, investing in the right infrastructure will directly influence the success of your AI initiatives.

By building and maintaining a robust ML infrastructure, you ensure that your models perform optimally, adapt to new data, and generate consistent business value.

For more insights and updates on AI, ML, and digital innovation, visit diglip7.com.


Top 11 Elixir Services companies in the World in 2025

Introduction:

Elixir has established itself as a language for building highly scalable systems in the ever-changing world of software development. Its concurrent, fault-tolerant design gives it an edge that many of today's tech companies recognize. This article looks at the top Elixir services companies in the world in 2025: companies with a spirit of innovation that have delivered reliable, high-performance solutions. Whether you want to hire strong Elixir developers or find a trustworthy Elixir development company, this list covers trusted names in the industry.

1. iCreativez Technologies

iCreativez is among the elite Elixir services companies around the globe. It serves startups, enterprises, and tech-driven firms with custom software solutions. Its team of seasoned Elixir engineers builds scalable backends, real-time apps, and distributed systems.

Key Services:

Elixir development tailored to client specifications.

Real-time application architecture.

Phoenix framework solutions.

DevOps integration with Elixir.

API and microservices development.

iCreativez is not a run-of-the-mill Elixir development house; many businesses rely on the agency for high performance, scalability, and innovation. Agile methodologies and strong client communication have made it a preferred partner across the globe.

Contact Information:

Website: www.icreativez.com

Founder: Mehboob Shar

Location: Pakistan

Service: Available 24/7

2. Amazon Web Services

AWS is known mainly for cloud infrastructure, but it also uses Elixir in several backend processes and serverless applications. The company draws on the language's efficiency for real-time communication and data tasks.

Specific Applications of Elixir at AWS Include:

Real-time serverless applications.

Lambda function enhancements.

Scalable messaging systems.

3. BigCommerce

BigCommerce, one of the leading e-commerce platforms, counts among the notable Elixir companies for 2025 because it uses Elixir to power fast and reliable backend systems. Elixir supports its work across order processing, inventory management, and customer interaction.

Core Contributions:

APIs powered by Elixir

Real-time inventory updates

Order routing and automation

4. Arcadia

Arcadia, a data science-driven energy platform, stands out as an Elixir development company that processes enormous volumes of data quickly with Elixir. It leverages the language's concurrency to stream energy data over distributed pipelines.

Services Include:

Energy data analytics

Real-time performance dashboards

Integration of Elixir and machine learning

5. Altium

Altium makes PCB design software. Its engineering team has turned to Elixir for backend tooling and simulation environments. Bringing Elixir into computation-heavy design environments marks Altium out as a top Elixir development company.

Key Solutions:

Real-time circuit simulations

API integration

Collaborative hardware design tools

6. SmartBear

SmartBear is a well-known developer tools brand, recognized for Swagger and TestComplete. The company has used Elixir in the development of test automation platforms and scalable analytics. This focus on developer experience places it among the best Elixir services companies worldwide.

Specializations:

Automated testing platforms

Elixir backend for QA dashboards

Developer support tools

7. MigSO

MigSO is a project management and engineering company that uses Elixir to manage complex industrial and IT projects. Custom Elixir solutions allow organizations to keep real-time tabs on large-scale project metrics.

Notable Features:

Real-time monitoring systems

Industrial process integration

Elixir dashboards for reporting

8. Digis

Digis is a global software development company that offers dedicated Elixir teams. It is known as an efficient Elixir development agency that provides customized staffing for businesses that need to scale rapidly and cost-effectively.

Core Services:

Dedicated Elixir teams

Agile product development

Custom application architecture

9. Mobitek Marketing Group

Mobitek integrates Elixir with its MarTech stack for real-time data processing, campaign tracking, and audience segmentation. As a contemporary digital company, it uses Elixir to develop intelligent and adaptive marketing platforms.

Expertise includes:

Real-time data tracking

Campaign performance backend

CRM platforms powered by Elixir

10. Green Apex

Green Apex has long offered Elixir development services for full-stack projects across eCommerce, fintech, and healthcare. The company writes applications that can accommodate thousands of concurrent users with ease.

Notable Strengths:

Real-time chat and notifications

Microservices building

Scalable cloud apps

11. iDigitalise - Digital Marketing Agency

iDigitalise is known first as a digital marketing agency; however, it has also built strong analytics and automation platforms using Elixir. That work makes it one of the hidden gems among top Elixir development companies.

What They Offer:

Elixir-supported analytic dashboards

Backend for marketing automation

Real-time SEO and campaign data processing

What Is So Special About Elixir in 2025?

With a growing number of companies worldwide turning to Elixir, its popularity is hardly accidental. Thanks to low latency and the ability to handle massive concurrency, Elixir has found its niche in industry verticals including fintech, healthcare, eCommerce, and IoT.

When looking for the best Elixir services in the world, distinguish companies that merely employ Elixir developers from those that also have a proven record of applying Elixir in complex real-world projects.

FAQs:

Q1: What are the best Elixir development companies in 2025?

Companies specializing in scalable, real-time Elixir applications, such as iCreativez, Digis, and Green Apex, are all mentioned among the very best.

Q2: Why does Elixir appeal to top companies?

Elixir suits modern applications whose requirements go beyond handling and manipulating real-time data to actively engaging users.

Q3: Which industries benefit the most from implementing Elixir?

Fintech, e-commerce, healthcare, and data analytics benefit most from Elixir because of its concurrency model.

Q4: How do I select a competent Elixir development agency?

Choose an agency that has a solid portfolio, expertise in real-time systems, and experience using Elixir with frameworks like Phoenix.

Conclusion

Elixir is prompting organizations to rethink their strategies for backend development and real-time systems. The world's leading Elixir services companies not only deliver great software but also continue to set the standard for performance, scalability, and innovation in 2025.

If you're a startup looking for the best Elixir development company, the companies above are strong examples. Among them, iCreativez remains a clear leader, setting trends and delivering world-class Elixir solutions across the globe.


Networking in Google Cloud: Build Scalable, Secure, and Cloud-Native Connectivity in 2025

Let’s get real—cloud is the new data center, and Networking in Google Cloud is where the magic happens. After more than 8 years working across cloud and enterprise networking, I can tell you one thing: when it comes to scalability, performance, and global reach, Google Cloud’s networking stack is in a league of its own.

Whether you’re a network architect, cloud engineer, or just stepping into GCP, understanding Google Cloud networking isn’t optional—it’s essential.

“Cloud networking isn't just a new skill—it's a whole new mindset.”

🌐 What Does "Networking in Google Cloud" Actually Mean?

It’s the foundation of everything you build in GCP. Every VM, container, database, and microservice—they all rely on your network architecture. Google Cloud offers a software-defined, globally distributed network that enables you to design fast, secure, and scalable solutions, whether for enterprise workloads or high-traffic web apps.

Here’s what GCP networking brings to the table:

Global VPCs – unlike other clouds, Google gives you one VPC across regions. No stitching required.

Cloud Load Balancing – scalable to millions of QPS, fully distributed, global or regional.

Hybrid Connectivity – via Cloud VPN, Cloud Interconnect, and Partner Interconnect.

Private Google Access – so you can access Google APIs securely from private IPs.

Traffic Director – Google’s fully managed service mesh traffic control plane.

“The cloud is your data center. Google Cloud makes your network borderless.”

👩‍💻 Who Should Learn Google Cloud Networking?

Cloud Network Engineers & Architects

DevOps & Site Reliability Engineers

Security Engineers designing secure perimeter models

Enterprises shifting from on-prem to hybrid/multi-cloud

Developers working with serverless, Kubernetes (GKE), and APIs

🧠 What You’ll Learn & Use

In a typical “Networking in Google Cloud” course or project, you’ll master:

Designing and managing VPCs and subnet architectures

Configuring firewall rules, routes, and NAT

Using Cloud Armor for DDoS protection and security policies

Connecting workloads across projects and networks using Shared VPC and VPC Network Peering

Monitoring and logging network traffic with VPC Flow Logs and Packet Mirroring

Securing traffic with TLS, identity-based access, and Service Perimeters
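As one small illustration of subnet design, the sketch below carves a single VPC address range into equal, non-overlapping regional subnets using Python's standard `ipaddress` module. The `plan_subnets` helper, the `10.0.0.0/16` range, and the `/20` subnet size are assumptions chosen for the example; this only plans address space and does not call any Google Cloud API.

```python
import ipaddress


def plan_subnets(vpc_cidr, regions, new_prefix):
    """Assign one equally sized, non-overlapping block to each region."""
    network = ipaddress.ip_network(vpc_cidr)
    blocks = list(network.subnets(new_prefix=new_prefix))
    if len(regions) > len(blocks):
        raise ValueError("not enough address space for every region")
    # Regions receive blocks in order, lowest addresses first.
    return dict(zip(regions, blocks))


plan = plan_subnets(
    "10.0.0.0/16",
    ["us-central1", "europe-west1", "asia-east1"],
    new_prefix=20,
)
for region, block in plan.items():
    print(region, block, block.num_addresses)
```

Planning ranges up front like this helps avoid overlapping CIDRs, which matter later when networks are peered or connected to on-prem ranges.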

“A well-architected cloud network is invisible when it works and unforgettable when it doesn’t.”

🔗 Must-Check Google Cloud Networking Resources

👉 Google Cloud Official Networking Docs

👉 Google Cloud VPC Overview

👉 Google Cloud Load Balancing

👉 Understanding Network Service Tiers

👉 NetCom Learning – Google Cloud Courses

👉 Cloud Architecture Framework – Google Cloud Blog

🏢 Real-World Impact

Streaming companies use Google’s premium tier to deliver low-latency video globally

Banks and fintechs depend on secure, hybrid networking to meet compliance

E-commerce giants scale effortlessly during traffic spikes with global load balancers

Healthcare platforms rely on encrypted VPNs and Private Google Access for secure data transfer

“Your cloud is only as strong as your network architecture.”

🚀 Final Thoughts

Mastering Networking in Google Cloud doesn’t just prepare you for certifications like the Professional Cloud Network Engineer—it prepares you for real-world, high-performance, enterprise-grade environments.

With global infrastructure, powerful automation, and deep security controls, Google Cloud empowers you to build cloud-native networks like never before.

“Don’t build in the cloud. Architect with intention.” – Me, after seeing a misconfigured firewall break everything 😅

So, whether you're designing your first VPC or re-architecting an entire global system, remember: in the cloud, networking is everything. And with Google Cloud, it’s better, faster, and more secure.

Let’s build it right.


Serverless Computing Market Growth Analysis and Forecast Report 2032

The Serverless Computing Market was valued at USD 19.30 billion in 2023 and is expected to reach USD 70.52 billion by 2032, growing at a CAGR of 15.54% from 2024-2032.
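The growth rate can be sanity-checked from the two endpoint values. The sketch below applies the standard compound annual growth rate formula, assuming nine compounding years between the 2023 valuation and the 2032 forecast; the `cagr` helper is written for this example only.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.15 means 15%)."""
    return (end_value / start_value) ** (1 / years) - 1


# USD 19.30 billion in 2023 growing to USD 70.52 billion by 2032.
rate = cagr(19.30, 70.52, 2032 - 2023)
print(f"Implied CAGR: {rate:.2%}")
```

Under that assumption the implied rate comes out at roughly 15.5%, in line with the reported 15.54%; the small gap likely reflects rounding or a slightly different base period in the report.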

The serverless computing market has gained significant traction over the last decade as organizations increasingly seek to build scalable, agile, and cost-effective applications. By allowing developers to focus on writing code without managing server infrastructure, serverless architecture is reshaping how software and cloud applications are developed and deployed. Cloud service providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are at the forefront of this transformation, offering serverless solutions that automatically allocate computing resources on demand. The flexibility, scalability, and pay-as-you-go pricing models of serverless platforms are particularly appealing to startups and enterprises aiming for digital transformation and faster time-to-market.

Serverless Computing Market adoption is expected to continue rising, driven by the surge in microservices architecture, containerization, and event-driven application development. The market is being shaped by the growing demand for real-time data processing, simplified DevOps processes, and enhanced productivity. As cloud-native development becomes more prevalent across industries such as finance, healthcare, e-commerce, and media, serverless computing is evolving from a developer convenience into a strategic advantage. By 2032, the market is forecast to reach unprecedented levels of growth, with organizations shifting toward Function-as-a-Service (FaaS) and Backend-as-a-Service (BaaS) to streamline development and reduce operational overhead.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/5510

Market Key Players:

AWS (AWS Lambda, Amazon S3)

Microsoft (Azure Functions, Azure Logic Apps)

Google Cloud (Google Cloud Functions, Firebase)

IBM (IBM Cloud Functions, IBM Watson AI)

Oracle (Oracle Functions, Oracle Cloud Infrastructure)

Alibaba Cloud (Function Compute, API Gateway)

Tencent Cloud (Cloud Functions, Serverless MySQL)

Twilio (Twilio Functions, Twilio Studio)

Cloudflare (Cloudflare Workers, Durable Objects)

MongoDB (MongoDB Realm, MongoDB Atlas)

Netlify (Netlify Functions, Netlify Edge Functions)

Fastly (Compute@Edge, Signal Sciences)

Akamai (Akamai EdgeWorkers, Akamai Edge Functions)

DigitalOcean (App Platform, Functions)

Datadog (Serverless Monitoring, Real User Monitoring)

Vercel (Serverless Functions, Edge Middleware)

Spot by NetApp (Ocean for Serverless, Elastigroup)

Elastic (Elastic Cloud, Elastic Observability)

Backendless (Backendless Cloud, Cloud Code)

FaunaDB (Serverless Database, FaunaDB Functions)

Scaleway (Serverless Functions, Object Storage)

8Base (GraphQL API, Serverless Back-End)

Supabase (Edge Functions, Supabase Realtime)

Appwrite (Cloud Functions, Appwrite Database)

Canonical (Juju, MicroK8s)

Market Trends

Several emerging trends are driving the momentum in the serverless computing space, reflecting the industry's pivot toward agility and innovation:

Increased Adoption of Multi-Cloud and Hybrid Architectures: Organizations are moving beyond single-vendor lock-in, leveraging serverless computing across multiple cloud environments to increase redundancy, flexibility, and performance.

Edge Computing Integration: The fusion of serverless and edge computing is enabling faster, localized data processing—particularly beneficial for IoT, AI/ML, and latency-sensitive applications.

Advancements in Developer Tooling: The rise of open-source frameworks, CI/CD integration, and observability tools is enhancing the developer experience and reducing the complexity of managing serverless applications.

Serverless Databases and Storage: Innovations in serverless data storage and processing, including event-driven data lakes and streaming databases, are expanding use cases for serverless platforms.

Security and Compliance Enhancements: With growing concerns over data privacy, serverless providers are focusing on end-to-end encryption, policy enforcement, and secure API gateways.

Enquiry of This Report: https://www.snsinsider.com/enquiry/5510

Market Segmentation:

By Enterprise Size

Large Enterprise

SME

By Service Model

Function-as-a-Service (FaaS)

Backend-as-a-Service (BaaS)

By Deployment

Private Cloud

Public Cloud

Hybrid Cloud

By End-user Industry

IT & Telecommunication

BFSI

Retail

Government

Industrial

Market Analysis

The primary growth drivers include the widespread shift to cloud-native technologies, the need for operational efficiency, and the rising number of digital-native enterprises. Small and medium-sized businesses, in particular, benefit from the low infrastructure management costs and scalability of serverless platforms.

North America remains the largest regional market, driven by early adoption of cloud services and strong presence of major tech giants. However, Asia-Pacific is emerging as a high-growth region, fueled by growing IT investments, increasing cloud literacy, and the rapid expansion of e-commerce and mobile applications. Key industry verticals adopting serverless computing include banking and finance, healthcare, telecommunications, and media.

Despite its advantages, serverless architecture comes with challenges such as cold start latency, vendor lock-in, and monitoring complexities. However, advancements in runtime management, container orchestration, and vendor-agnostic frameworks are gradually addressing these limitations.

Future Prospects

The future of the serverless computing market looks exceptionally promising, with innovation at the core of its trajectory. By 2032, the market is expected to be deeply integrated with AI-driven automation, allowing systems to dynamically optimize workloads, security, and performance in real time. Enterprises will increasingly adopt serverless as the default architecture for cloud application development, leveraging it not just for backend APIs but for data science workflows, video processing, and AI/ML pipelines.

As open standards mature and cross-platform compatibility improves, developers will enjoy greater freedom to move workloads across different environments with minimal friction. Tools for observability, governance, and cost optimization will become more sophisticated, making serverless computing viable even for mission-critical workloads in regulated industries.

Moreover, the convergence of serverless computing with emerging technologies—such as 5G, blockchain, and augmented reality—will open new frontiers for real-time, decentralized, and interactive applications. As businesses continue to modernize their IT infrastructure and seek leaner, more responsive architectures, serverless computing will play a foundational role in shaping the digital ecosystem of the next decade.

Access Complete Report: https://www.snsinsider.com/reports/serverless-computing-market-5510

Conclusion

Serverless computing is no longer just a developer-centric innovation—it's a transformative force reshaping the global cloud computing landscape. Its promise of simplified operations, cost efficiency, and scalability is encouraging enterprises of all sizes to rethink their application development strategies. As demand for real-time, responsive, and scalable solutions grows across industries, serverless computing is poised to become a cornerstone of enterprise digital transformation. With continued innovation and ecosystem support, the market is set to achieve remarkable growth and redefine how applications are built and delivered in the cloud-first era.



Real-time Data Processing with Azure Stream Analytics

Introduction

Today's fast-paced digital landscape demands that organizations respond to events as they happen. Processing data in real time lets organizations detect fraudulent financial activity, monitor sensor readings, and track user activity on web pages, which enables quicker and smarter business decisions.

Azure Stream Analytics is Microsoft's real-time analytics service, built to analyze streaming data at high speed. This article explains the architecture of Azure Stream Analytics and its key features, and shows how to build simple real-time data pipelines.

What is Azure Stream Analytics?

Azure Stream Analytics (ASA) is a fully managed, serverless service for real-time stream processing. It lets organizations ingest data from different sources, process it, and visualize the results using a straightforward SQL-like query language.

Through connectors to other Azure data services, ASA acts as an intermediary that processes streaming data and routes it to live dashboards, alerts, and storage destinations. It offers the throughput and low latency needed to handle millions of IoT device messages or to monitor application transactions.

Core Components of Azure Stream Analytics

A Stream Analytics job typically involves three major components:

1. Input

Data can be ingested from one or more sources including:

Azure Event Hubs – for telemetry and event stream data

Azure IoT Hub – for IoT-based data ingestion

Azure Blob Storage – for batch or historical data

2. Query

The core of ASA is its SQL-like query engine. You can use the language to:

Filter, join, and aggregate streaming data

Apply time-window functions

Detect patterns or anomalies in motion

3. Output

The processed data can be routed to:

Azure SQL Database

Power BI (real-time dashboards)

Azure Data Lake Storage

Azure Cosmos DB

Blob Storage, and more

Example Use Case

Suppose an IoT system sends temperature readings from multiple devices every second. You can use ASA to calculate the average temperature per device every five minutes:
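A query for this aggregation might look like the sketch below, held here in a Python string next to a small local function that shows what a five-minute tumbling window computes. The aliases `[input-alias]` and `[output-alias]` and the field names `DeviceId`, `Temperature`, and `EventTime` are assumptions for illustration, and the Python function is not how ASA executes the query.

```python
from collections import defaultdict

# Sketch of a Stream Analytics query; aliases and field names are assumed.
ASA_QUERY = """
SELECT
    DeviceId,
    AVG(Temperature) AS AvgTemperature,
    System.Timestamp() AS WindowEnd
INTO [output-alias]
FROM [input-alias] TIMESTAMP BY EventTime
GROUP BY DeviceId, TumblingWindow(minute, 5)
"""

WINDOW_SECONDS = 5 * 60


def tumbling_average(readings, window_seconds=WINDOW_SECONDS):
    """Average temperature per (device, window end).

    readings: iterable of (device_id, epoch_seconds, temperature).
    Each reading falls into exactly one window, keyed by the window's end time.
    """
    totals = defaultdict(lambda: [0.0, 0])
    for device_id, epoch_seconds, temperature in readings:
        # Round the timestamp up to the next window boundary.
        window_end = -(-epoch_seconds // window_seconds) * window_seconds
        bucket = totals[(device_id, window_end)]
        bucket[0] += temperature
        bucket[1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


sample = [("a", 10, 20.0), ("a", 200, 22.0), ("a", 310, 30.0), ("b", 5, 10.0)]
averages = tumbling_average(sample)
```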

This simple query delivers aggregated metrics in real time, which can then be displayed on a dashboard or sent to a database for further analysis.

Key Features

Azure Stream Analytics offers several benefits:

Serverless architecture: No infrastructure to manage; Azure handles scaling and availability.

Real-time processing: Supports sub-second latency for streaming data.

Easy integration: Works seamlessly with other Azure services like Event Hubs, SQL Database, and Power BI.

SQL-like query language: Low learning curve for analysts and developers.

Built-in windowing functions: Supports tumbling, hopping, and sliding windows for time-based aggregations.

Custom functions: Extend queries with JavaScript or C# user-defined functions (UDFs).

Scalability and resilience: Can handle high-throughput streams and recovers automatically from failures.
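To make the difference between tumbling and hopping windows concrete, the sketch below lists which windows a single event timestamp belongs to. The `window_ends` helper is hypothetical, and the convention that a window ending at time e covers the interval (e - size, e] is an assumption chosen for this illustration.

```python
def window_ends(timestamp, size, hop):
    """Return the end times of all windows that contain the timestamp.

    Windows end at multiples of hop and span size time units.
    When hop equals size the windows do not overlap (a tumbling window).
    """
    # First window boundary at or after the event.
    end = -(-timestamp // hop) * hop
    ends = []
    while end < timestamp + size:
        ends.append(end)
        end += hop
    return ends


# Tumbling: 5-second windows, one window per event.
tumbling = window_ends(7, size=5, hop=5)
# Hopping: 10-second windows every 5 seconds, so windows overlap.
hopping = window_ends(7, size=10, hop=5)
```

With overlapping windows the same event contributes to several aggregates, which is why hopping windows give smoother, more frequently updated results at the cost of extra computation.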

Common Use Cases

Azure Stream Analytics supports real-time data solutions across multiple industries:

Retail: Track customer interactions in real time to deliver dynamic offers.

Finance: Detect anomalies in transactions for fraud prevention.

Manufacturing: Monitor sensor data for predictive maintenance.

Transportation: Analyze traffic patterns to optimize routing.

Healthcare: Monitor patient vitals and trigger alerts for abnormal readings.

Power BI Integration

One of ASA's most useful features is its direct integration with Power BI. Azure Stream Analytics can send query results straight to Power BI, so dashboards update in near real time. Operations teams, managers, and analysts can watch key metrics continuously and act as soon as a threshold is breached.

Best Practices

To get the most out of Azure Stream Analytics:

Use partitioned input sources like Event Hubs for better throughput.

Keep queries efficient by limiting complex joins and filtering early.

Avoid UDFs unless necessary; they can increase latency.

Use reference data for enriching live streams with static datasets.

Monitor job metrics using Azure Monitor and set alerts for failures or delays.

Prefer direct output integration over intermediate storage where possible to reduce delays.
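Two of the practices above, filtering early and enriching with reference data, can be sketched in plain Python. This is an illustration of the idea rather than ASA syntax; the function `enrich`, the field names, and the sample device IDs are all assumptions.

```python
def enrich(stream, reference, min_temp):
    """Filter first, then join each surviving event to static reference data.

    `reference` maps a device ID to a dict of static attributes.
    Events from unknown devices are skipped (inner-join behaviour).
    """
    for event in stream:
        if event["temperature"] < min_temp:
            continue  # drop early, before paying for the lookup
        meta = reference.get(event["device_id"])
        if meta is None:
            continue  # no matching reference row
        yield {**event, **meta}


# Hypothetical static dataset and live events.
reference = {"dev-1": {"site": "plant-a"}, "dev-2": {"site": "plant-b"}}
stream = [
    {"device_id": "dev-1", "temperature": 71.5},
    {"device_id": "dev-2", "temperature": 40.0},
    {"device_id": "dev-9", "temperature": 90.0},
]
hot = list(enrich(stream, reference, min_temp=60.0))
```

Only the first event survives: the second is filtered out by temperature, and the third has no reference entry.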

Getting Started

Setting up a simple ASA job is easy:

Create a Stream Analytics job in the Azure portal.

Add inputs from Event Hub, IoT Hub, or Blob Storage.

Write your SQL-like query for transformation or aggregation.

Define your output—whether it’s Power BI, a database, or storage.

Start the job and monitor it from the portal.

Conclusion

Organizations of all sizes use Azure Stream Analytics to process real-time data at the scale their operations require. Its tight integration with other Azure services, its declarative SQL-based query language, and its serverless architecture make it a strong foundation for building streaming systems.

As part of Azure, Stream Analytics lets organizations process continuous data and act on it in real time, which improves operational intelligence and, in turn, customer satisfaction and market position.

#azure data engineer course#azure data engineer course online#azure data engineer online course#azure data engineer online training#azure data engineer training#azure data engineer training online#azure data engineering course#azure data engineering online training#best azure data engineer course#best azure data engineer training#best azure data engineering courses online#learn azure data engineering#microsoft azure data engineer training

Scalable Data Lake Consulting Services for Modern Businesses

Visit Site Now - https://goognu.com/services/data-lake-consulting-services

Unlock the full potential of your data with our Data Lake Consulting Services. We help organizations design, implement, and manage scalable data lakes to efficiently store, process, and analyze structured and unstructured data in real time.

Our services cover data lake architecture, data ingestion, governance, security, and analytics integration. Whether you are starting from scratch or modernizing an existing data infrastructure, we provide tailored solutions that align with your business objectives.

We specialize in cloud-based data lakes on AWS, Azure, and Google Cloud, ensuring seamless integration with data warehouses, analytics platforms, and AI/ML workflows. Our experts design ETL pipelines, real-time data streaming, and automated data processing to enhance efficiency and scalability.

Security is a top priority in our approach. We implement access controls, encryption, and compliance frameworks to protect sensitive data. Our data governance strategies ensure proper classification, cataloging, and adherence to regulations and standards such as GDPR, HIPAA, and SOC 2.

With our cost optimization techniques, we help businesses manage storage expenses while maximizing performance. Using serverless computing, object storage, and advanced data lifecycle policies, we ensure cost-efficient and high-performing data lakes.

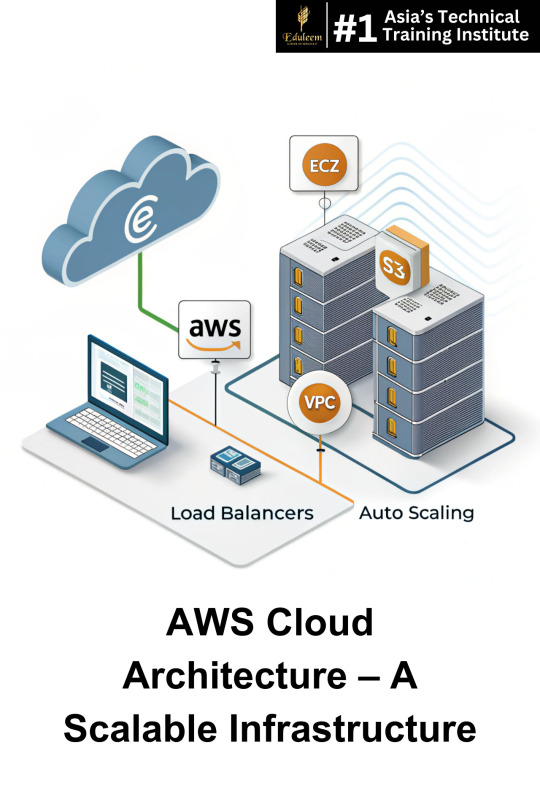

AWS Training in Bangalore: Architecting Fundamentals Explained

Mastering AWS Infrastructure for Scalable Cloud Solutions

Learn AWS Cloud Architecting from the best AWS training institute in Bangalore with Eduleem.

In today’s cloud-driven world, AWS (Amazon Web Services) dominates the industry with its scalability, security, and flexibility. Whether you're a developer, system administrator, or IT professional, mastering AWS infrastructure is key to advancing your career.

By enrolling in an AWS training course in Bangalore, professionals gain expertise in cloud computing, networking, and security. Learning from an industry-recognized AWS institute in Bangalore prepares individuals to architect and deploy fault-tolerant, scalable AWS solutions.

Understanding AWS Infrastructure: Core Components

1️⃣ Compute Services: Powering Cloud Applications

Amazon EC2 (Elastic Compute Cloud): Virtual servers for running applications with flexible scalability.

AWS Lambda: Serverless compute service for automatic scaling without infrastructure management.

2️⃣ Storage Solutions: Secure & Scalable Data Management

Amazon S3 (Simple Storage Service): Object storage for secure and cost-effective data storage.

Amazon EBS (Elastic Block Store): Persistent storage volumes for EC2 instances.

3️⃣ Networking & Security: Ensuring Seamless Connectivity

Amazon VPC (Virtual Private Cloud): Isolated cloud resources for enhanced security.

AWS IAM (Identity & Access Management): Granular access control to AWS resources.

4️⃣ Load Balancing & Auto Scaling: Maximizing Performance

Elastic Load Balancer (ELB): Distributes traffic across multiple EC2 instances for reliability.

Auto Scaling Groups: Automatically adjusts capacity based on demand.
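The two ideas in this section, spreading traffic across instances and adjusting capacity to demand, can be sketched in a few lines of Python. This is a simplified model and not how ELB or Auto Scaling are actually implemented; the function names, instance IDs, and the proportional scaling rule are assumptions chosen for illustration.

```python
import math
from itertools import cycle


def round_robin(requests, instances):
    """Assign each request to the next instance in turn."""
    assignment = {name: [] for name in instances}
    for request, name in zip(requests, cycle(instances)):
        assignment[name].append(request)
    return assignment


def desired_capacity(current, metric, target, minimum, maximum):
    """Scale the instance count in proportion to metric / target,
    then clamp the result to the [minimum, maximum] range."""
    wanted = math.ceil(current * metric / target)
    return max(minimum, min(maximum, wanted))


# Seven hypothetical requests over three hypothetical instances.
spread = round_robin(range(7), ["i-a", "i-b", "i-c"])

# Average CPU at 90% against a 60% target: grow from 4 instances.
scaled_out = desired_capacity(current=4, metric=90.0, target=60.0, minimum=2, maximum=10)
# Average CPU at 15%: shrink, but never below the minimum.
scaled_in = desired_capacity(current=4, metric=15.0, target=60.0, minimum=2, maximum=10)
```

The clamp is the important design point: however low the load falls, the group keeps its minimum size, and however high it rises, cost stays bounded by the maximum.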

5️⃣ Database Services: Optimizing Data Handling

Amazon RDS (Relational Database Service): Managed database solutions for high availability.

DynamoDB: A NoSQL database for scalable, low-latency applications.

Prepare for the AWS Certified Solutions Architect Exam

Want to master AWS Cloud Architecting? Check out our AWS Certified Solutions Architect - Associate Exam: Preparation Guide and get ready for certification!

How AWS Infrastructure Benefits Businesses

📌 Netflix: Uses AWS Auto Scaling and EC2 instances to manage millions of daily video streams.

📌 Airbnb: Leverages Amazon S3, RDS, and CloudFront for seamless global operations.

📌 Spotify: Implements AWS Lambda and DynamoDB for highly efficient music streaming services.

With AWS being the backbone of global enterprises, professionals skilled in AWS cloud computing are in high demand. Learning from the best AWS training institute in Bangalore can open doors to high-paying roles.

Why Choose Eduleem for AWS Training in Bangalore?

Eduleem offers the best AWS training in Bangalore, providing:

🔥 Practical AWS Labs: Work on real-world cloud projects with expert guidance.

📜 Industry-Certified Training: Get trained by AWS-certified professionals.

🔧 Hands-on Cloud Experience: Master AWS EC2, S3, VPC, IAM, and Auto Scaling.

🚀 Career Support & Placement Assistance: Secure top cloud computing jobs.

Whether you're aiming to become a cloud architect, AWS engineer, or solutions architect, learning from the best AWS training institute in Bangalore will help you excel in AWS training and certification.

Conclusion: Build a Future-Proof Career in AWS with Eduleem

AWS expertise is crucial for IT professionals and businesses alike. If you're looking to master AWS architecture, enrolling in the best AWS course in Bangalore is your next step.

🎯 Join Eduleem’s AWS Training Today!

#aws#azure#cloudsecurity#cloudsolutions#devops#eduleem#AWSTraining#CloudComputing#AWS#DevOps#AWSCourse#CloudArchitect#BestAWSTraining#Eduleem#AWSInfrastructure#AmazonWebServices

Custom IoT Development Services for Smarter Enterprises

In an era where intelligence meets automation, the Internet of Things (IoT) stands as a transformative force, connecting devices, people, and systems like never before. Businesses across industries are leveraging IoT to enhance operations, deliver better customer experiences, and unlock new revenue streams.

At Mobiloitte, we deliver comprehensive, secure, and scalable IoT Development Services to bring your connected ideas to life. As a full-cycle IoT development company, we specialize in building high-performance, data-driven solutions that allow enterprises to monitor, automate, and control their operations in real-time.

Whether you're a startup building a smart product or an enterprise seeking end-to-end automation, Mobiloitte is the ideal IoT app development company to help you lead in the connected economy.

What is IoT Development?

IoT development involves creating ecosystems where physical devices—like sensors, machinery, wearables, and appliances—are connected to the internet and to each other. These devices collect and exchange data, which is analyzed to generate actionable insights.

Key components of an IoT solution include:

Hardware (Sensors & Devices)

Connectivity (Wi-Fi, BLE, Zigbee, 5G, NB-IoT)

IoT Gateways

Cloud Infrastructure

Mobile/Web Applications

Data Analytics & AI Integration

As an expert IoT application development company, Mobiloitte handles the complete development lifecycle—from hardware integration and cloud setup to mobile app development and predictive analytics.

Why Choose Mobiloitte as Your IoT Development Company?

✅ End-to-End IoT Expertise

We deliver full-stack IoT solutions—from edge devices to cloud platforms and business intelligence dashboards.

✅ Cross-Industry IoT Experience

We’ve delivered IoT projects in smart manufacturing, healthcare, automotive, energy, retail, logistics, and more.

✅ Hardware + Software Proficiency

Our team understands both the embedded systems behind IoT hardware and the software layers that power smart applications.

✅ Secure & Scalable Architectures

We build secure, scalable systems ready to handle thousands of devices and millions of data points.

✅ Cloud-Native & Edge-Ready

We work with AWS IoT, Azure IoT Hub, and Google Cloud IoT, and deploy edge computing when real-time local data processing is needed.

Our IoT Application Development Services

Mobiloitte offers a complete suite of IoT application development services, covering everything from device firmware to cloud and mobile interfaces.

🔹 1. IoT Consulting & Strategy

We evaluate your business goals, tech readiness, and use cases to build a future-ready IoT roadmap.

Feasibility analysis

Technology stack selection

Use-case definition

Compliance & security planning

🔹 2. Hardware Integration & Prototyping

Our team integrates and configures IoT sensors, actuators, and embedded devices tailored to your project needs.

PCB design & prototyping

Microcontroller programming

Sensor calibration

BLE/NB-IoT/LoRaWAN integration

🔹 3. IoT App Development (Mobile/Web)

We develop cross-platform mobile and web applications that enable users to monitor and control devices in real time.

React Native, Flutter, Swift, Kotlin, Angular

Device dashboards & alerts

Remote control features

Data visualization & analytics

🔹 4. Cloud & Backend Development

We build cloud-based platforms that receive, process, store, and visualize sensor data securely and efficiently.

AWS IoT, Azure IoT Hub, Google Cloud IoT

MQTT, CoAP, HTTP/RESTful APIs

Serverless architecture

Auto-scaling & data backups
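Since MQTT appears in the list above, a short Python sketch may help show how a backend decides which subscriptions receive a device message. It follows the standard MQTT wildcard rules (`+` for one level, `#` for all remaining levels) but is a simplified illustration: it does not validate filters or handle topics beginning with `$`, and the function name and topic strings are assumptions.

```python
def topic_matches(topic_filter, topic):
    """Return True if an MQTT topic name matches a subscription filter.

    '+' matches exactly one topic level. '#' matches all remaining
    levels (including none) and is only valid as the last level.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: valid only in the final position.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False  # literal level differs
    return len(filter_levels) == len(topic_levels)


# Hypothetical topics for a fleet of temperature sensors.
one_level = topic_matches("sensors/+/temperature", "sensors/dev-1/temperature")
all_levels = topic_matches("sensors/#", "sensors/dev-1/temperature")
too_deep = topic_matches("sensors/+", "sensors/dev-1/temperature")
```

A dashboard could subscribe with `sensors/+/temperature` to receive readings from every device without knowing the device IDs in advance.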

🔹 5. Data Analytics & AI Integration

Our IoT systems are enhanced with AI/ML to provide predictive analytics, anomaly detection, and intelligent automation.

Predictive maintenance

Pattern recognition

Real-time alerts and automation

Smart decision-making
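As a rough illustration of anomaly detection on sensor data, the Python sketch below flags readings that deviate sharply from the recent past. It uses a simple rolling z-score, which is one common baseline and not a description of any specific production method; the function name, window size, threshold, and sample values are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return the indices of values lying more than `threshold`
    standard deviations from the mean of the previous `window` values."""
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip the check when the history is perfectly flat.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                anomalies.append(i)
        history.append(value)
    return anomalies


# Hypothetical vibration readings with one obvious spike.
vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 5.0, 1.0, 0.9]
flagged = find_anomalies(vibration)
```

In a real deployment the flagged index would trigger an alert or a maintenance ticket; the spike also enters the history afterwards and widens the band for the next few readings, which a more careful detector would account for.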

🔹 6. IoT Testing & QA